Introduction

Artificial Intelligence (AI) has changed the way we handle transcription. From podcasts to meeting recordings, AI transcription tools turn speech into text faster and more cheaply than a human transcriber. But one big question remains: how accurate are AI transcription tools with noisy audio?

Accuracy is the most important factor when choosing speech-to-text software. In a quiet environment, most AI systems perform well and produce near-perfect transcripts. The real challenge begins when you transcribe recordings from noisy environments, such as crowded interviews, busy offices, or phone calls with background chatter. That's where the transcription error rate, and speech recognition accuracy in general, becomes critical.

Noisy audio doesn't just confuse the software; it changes how words are captured, especially when multiple speakers talk at once or the background noise is heavy. Many tools claim their machine learning models filter out unwanted sounds, but do they really deliver?

In this blog, we’ll explore the real accuracy of AI tools in such conditions. We’ll look at the challenges of noisy audio transcription, compare popular platforms, analyze error rates, and share tips to improve transcription accuracy. By the end, you’ll know whether AI tools are reliable enough—or if human transcription still holds the edge.

Understanding AI Transcription Accuracy

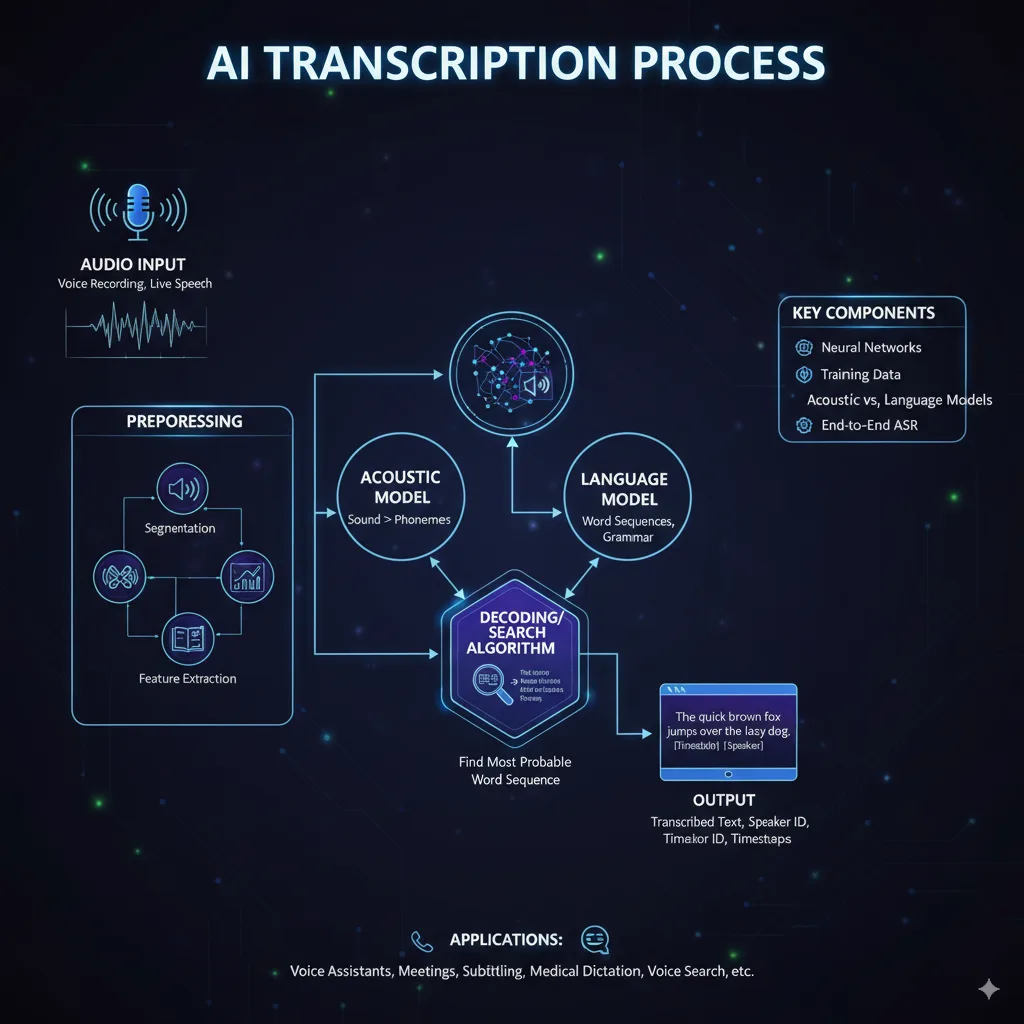

When we talk about AI transcription accuracy, we’re mainly referring to how well the tool converts spoken words into text without mistakes. The most common way to measure this is the Word Error Rate (WER). A lower WER means higher accuracy, while a higher WER shows more errors in the transcription.
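To make WER concrete, here is a minimal Python sketch that computes it as a word-level edit distance: the fewest substitutions, deletions, and insertions needed to turn the reference transcript into the tool's output, divided by the number of reference words. The function name `word_error_rate` is hypothetical, and the sketch assumes simple lowercase, whitespace-based tokenization; published benchmarks usually normalize punctuation, numbers, and casing more carefully, which can change the score.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = (substitutions + deletions + insertions) / words in reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # dist[i][j] = minimum edits to turn the first i reference words
    # into the first j hypothesis words (word-level Levenshtein distance).
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match if cost is 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


if __name__ == "__main__":
    reference = "please send the report before the meeting"
    hypothesis = "please send a report before meeting"
    print(f"WER: {word_error_rate(reference, hypothesis):.1%}")
```

In the example, the tool swapped "the" for "a" and dropped a second "the", so 2 errors over 7 reference words give a WER of about 28.6%.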

In ideal conditions—like a single speaker talking clearly in a quiet room—most AI speech-to-text tools can reach 85%–95% accuracy. Some advanced platforms, such as Otter.ai or Sonix, even claim close to human-level performance. However, once we introduce noisy audio into the mix, accuracy often drops. This happens because AI systems struggle to separate the speaker’s voice from background noise.

It's also important to compare AI and human transcription accuracy. Humans understand context, accents, and slang, while AI relies on statistical patterns learned from its training data. For instance, a human transcriber can infer a muffled word from the meaning of the sentence, whereas AI might produce random or unrelated text.

Another factor is the dataset used to train the AI. Tools trained mostly on clear, professional recordings may perform poorly on speech recorded against noisy backgrounds or spoken with strong regional accents. That's why the transcription error rate is usually higher for noisy audio than for quiet recordings.

Overall, AI is impressive, but understanding its strengths and limitations is crucial before using it for important work like legal, medical, or academic transcription.

Challenges of Noisy Audio Transcription

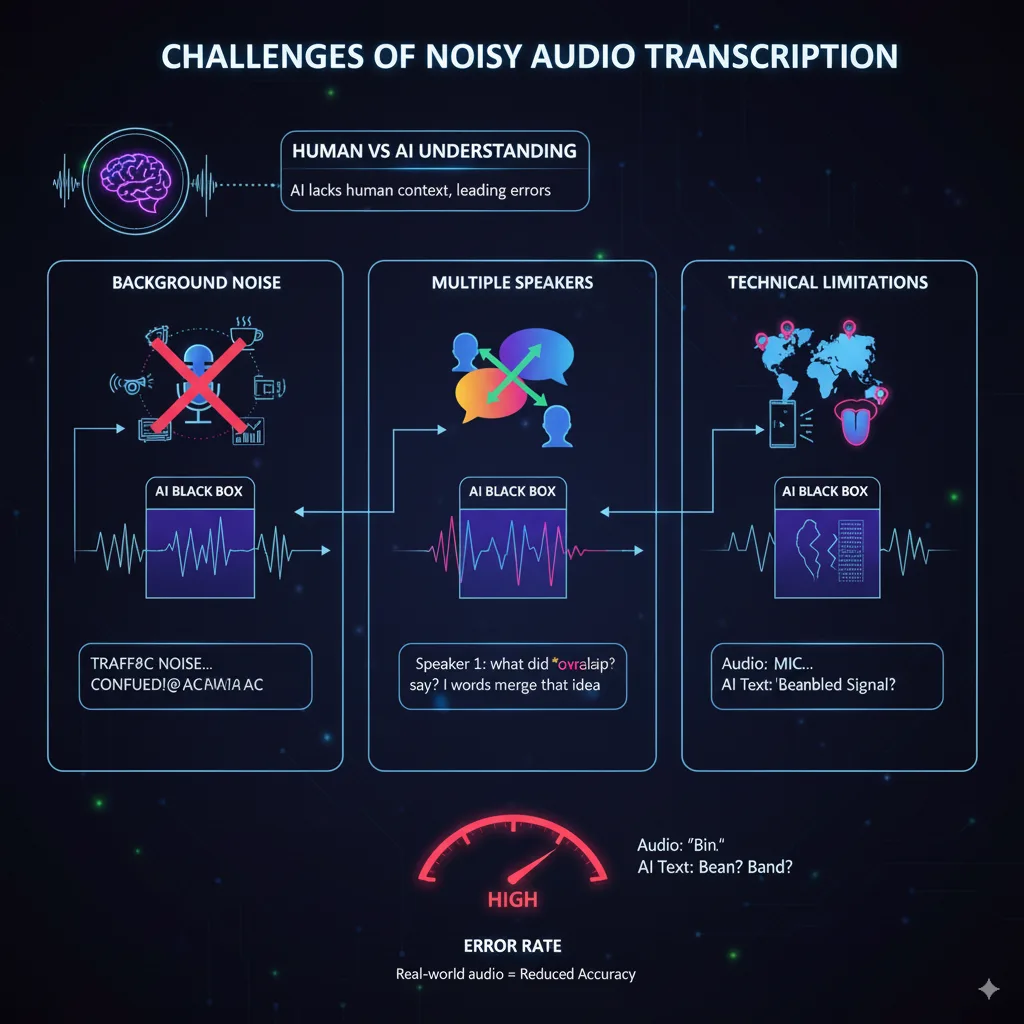

One of the biggest hurdles for AI transcription tools is handling recordings made in noisy environments. Unlike a quiet studio, real-world audio often includes unwanted sounds that interfere with speech recognition accuracy.

1. Background Noise

Everyday sounds such as traffic, typing, air conditioning, or chatter in a café can confuse the software. Even advanced tools with built-in noise reduction sometimes struggle when the noise level is too high.

2. Multiple Speakers

Conversations with overlapping voices make things more complicated. Tools that transcribe multiple speakers often attribute words to the wrong person or merge two voices into one line, which drives up the error rate in noisy audio.

3. Accents and Pronunciation

AI systems are trained on large datasets, but those datasets may not cover every regional accent or speech pattern. As a result, automatic speech recognition accuracy can vary considerably from one speaker to the next.

4. Technical Limitations

Poor microphone quality, low recording bitrate, or distorted sound can worsen results. Even the best AI transcription software for interviews will fail if the audio input is unclear.

5. Context and Meaning

Unlike humans, AI doesn't understand meaning; it only predicts the most likely words. In noisy situations, this often leads to funny or irrelevant phrases in the output, which is why the gap between human and AI transcription accuracy is still debated.

Key Factors That Affect Speech-to-Text Accuracy in Noisy Backgrounds

The performance of AI transcription tools depends on more than just the software. Several external and internal factors directly influence how accurate your transcript will be, especially in noisy audio environments.

1. Recording Quality

A clear audio input is the foundation of accurate transcription. A poor-quality microphone or low-bitrate recording will increase the transcription error rate in noisy audio. Investing in a good microphone with built-in noise cancellation can make a huge difference.
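Before uploading a file, a quick pre-flight check can catch a low sample rate or clipped (distorted) audio. The sketch below uses only Python's standard `wave` module and assumes an uncompressed 16-bit PCM WAV file; compressed formats such as MP3 would need a decoding library first. The function name `inspect_wav` and the 0.999 clipping threshold are hypothetical choices, not settings from any transcription tool.

```python
import array
import sys
import wave


def inspect_wav(path: str, clip_threshold: float = 0.999) -> dict:
    """Report basic quality stats for a 16-bit PCM WAV file."""
    with wave.open(path, "rb") as wav:
        channels = wav.getnchannels()
        sample_width = wav.getsampwidth()  # bytes per sample
        sample_rate = wav.getframerate()
        frames = wav.readframes(wav.getnframes())

    if sample_width != 2:
        raise ValueError("this sketch only handles 16-bit PCM WAV files")

    samples = array.array("h", frames)  # signed 16-bit samples (channels interleaved)
    if sys.byteorder == "big":
        samples.byteswap()  # WAV sample data is little-endian

    # Count samples at (or extremely close to) full scale as clipped.
    peak_limit = 32767 * clip_threshold
    clipped = sum(1 for s in samples if abs(s) >= peak_limit)
    return {
        "sample_rate_hz": sample_rate,
        "channels": channels,
        "bitrate_kbps": sample_rate * sample_width * 8 * channels / 1000,
        "clipped_fraction": clipped / len(samples) if samples else 0.0,
    }
```

A mono, 16 kHz, 16-bit file works out to 256 kbps uncompressed, a common baseline for speech recognition. A noticeable clipped fraction usually means the input gain was set too high during recording.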

2. Background Noise Reduction

Some tools include built-in background noise reduction, but how well it works depends on how different the noise is from the speaker's voice. If the background sounds resemble speech (like other people talking), even advanced speech recognition software will perform worse.

3. Number of Speakers

Conversations with multiple people are harder to process. While voice-to-text tools for multiple speakers now offer speaker identification, overlapping dialogues still reduce AI transcription accuracy.
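If your tool's speaker identification gives you timestamps, you can measure how much of a recording is overlapping speech, which is a rough signal of how hard it will be to transcribe. This sketch assumes segments arrive as hypothetical `(start_sec, end_sec, speaker)` tuples rather than any specific tool's export format, and uses a simple sweep over start and end events to total the time when two or more segments are active.

```python
from typing import List, Tuple

Segment = Tuple[float, float, str]  # (start_sec, end_sec, speaker)


def overlap_seconds(segments: List[Segment]) -> float:
    """Total time during which two or more speakers are talking at once.

    Assumes a single speaker's own segments do not overlap each other.
    """
    events = []
    for start, end, _speaker in segments:
        events.append((start, 1))   # someone starts talking
        events.append((end, -1))    # someone stops talking
    # At equal timestamps, process ends (-1) before starts (+1)
    # so back-to-back turns are not counted as overlap.
    events.sort(key=lambda e: (e[0], e[1]))

    active = 0
    overlap = 0.0
    prev_time = None
    for time, delta in events:
        if prev_time is not None and active >= 2:
            overlap += time - prev_time
        active += delta
        prev_time = time
    return overlap
```

For segments `(0, 10, "A")`, `(8, 15, "B")`, and `(14, 20, "A")`, the speakers overlap from 8 to 10 s and from 14 to 15 s, so the function returns 3.0 seconds out of a 20-second clip.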

4. Accents, Language, and Vocabulary

Different accents, fast speech, or technical jargon can confuse AI. While machine learning for speech recognition is improving, many tools still fail to adapt without specialized training.
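Some tools let you supply a custom vocabulary for jargon. Where that isn't available, a simple post-processing pass can snap near-miss words back to a glossary. This sketch swaps in fuzzy string matching from Python's standard `difflib`; it is not how any particular transcription service handles jargon, and the function name `apply_glossary` and the 0.8 cutoff are hypothetical choices.

```python
import difflib
import re
from typing import List


def apply_glossary(transcript: str, glossary: List[str], cutoff: float = 0.8) -> str:
    """Replace words that closely resemble a glossary term with that term."""
    # Map lowercase spellings to the preferred spelling, e.g. "kubernetes" -> "Kubernetes".
    lookup = {term.lower(): term for term in glossary}

    def fix(match):
        word = match.group(0)
        # get_close_matches ranks candidates by difflib's similarity ratio (0 to 1).
        candidates = difflib.get_close_matches(word.lower(), list(lookup), n=1, cutoff=cutoff)
        return lookup[candidates[0]] if candidates else word

    # The pattern matches single alphabetic words, so multi-word terms are out of scope here.
    return re.sub(r"[A-Za-z]+", fix, transcript)


if __name__ == "__main__":
    raw = "we deployed it on kubernets and postgress"
    print(apply_glossary(raw, ["Kubernetes", "PostgreSQL", "Postgres"]))
```

Keep the glossary short and the cutoff high: a loose threshold will start "correcting" ordinary words that happen to look like a technical term.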

5. Tool’s Algorithm & Training Data

Not all tools are created equal. Some transcription tools aimed at interviews and podcasts are trained on diverse datasets, which makes them better at handling messy audio. Others, trained mainly on clean studio recordings, may fail in real-life conditions.

Best AI Transcription Software for Interviews & Podcasts

When recording interviews or podcasts, you need tools that can handle real-world audio: multiple speakers, background noise, accent variations, and more. These are some of the top AI transcription options for such settings, with their strengths and limitations, especially for real-time transcription and noisy audio.

1. Otter.ai

- Strengths: Offers real-time transcription and speaker identification, and integrates with Zoom, Microsoft Teams, and similar platforms. It performs decently even with some background noise.

- Weaknesses: In very noisy environments (crowds, overlapping voices), error rates increase. Also, non-English accents sometimes get mistranscribed.

2. Whisper by OpenAI

- Strengths: Can run offline when self-hosted, supports many languages, and handles multiple accents and background noise well thanks to its large, diverse training dataset. A good option if you want privacy and control.

- Weaknesses: Requires some technical setup for best performance, and doesn't include a transcript editor or speaker tagging out of the box.

3. Krisp

- Strengths: Has strong noise-cancellation features that reduce background noise before transcription. Great for meetings or interviews when you want to cut ambient sounds. A free tier makes it accessible.

- Weaknesses: While noise suppression is good, transcription quality still drops when noise is too similar to speech or in very chaotic environments.

4. Looppanel

- Strengths: Designed for interview transcription and qualitative research. Claims 90%+ accuracy, supports many languages, and has tools to tag and find important moments. Useful when you want more than a raw transcript (analysis, highlights).

- Weaknesses: Premium features cost extra. Audio quality still matters a lot; it's not immune to errors with heavy background noise.

5. Trint, Rev, Speechmatics (+ Others)

- Strengths: Many of these offer powerful editors, speaker separation, good handling of regional accent variation, and features like time-stamped transcripts. They tend to fare better than simpler tools when the audio quality is high to moderate.

- Weaknesses: In noisy recordings (crowd noise, overlapping speech, echo), you'll still see issues, and manual corrections are often needed. Costs also rise with usage, especially for longer audio.

✅ Summary Tips on Choosing

- Pick a tool with speaker identification if multiple people talk.

- Look for noise cancellation / background noise reduction features.

- Try offline modes or tools that allow you to pre-process audio (e.g. remove noise, normalize volume) before transcription.

- For podcasts & interviews, tools that allow editing of the transcript (correcting misheard words, splitting speakers) are very helpful.

- Always test your tool with sample noisy recordings (similar environment to your podcasts or interviews) before fully committing.
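One way to run such a test with controlled noise levels is to mix a clean recording with a noise recording (café chatter, traffic) at a few signal-to-noise ratios, for example 20, 10, and 0 dB, and compare each tool's WER at every level. This NumPy sketch assumes both clips are already loaded as mono float arrays at the same sample rate (loading files would need a library such as soundfile); `mix_at_snr` and `snr_db` are hypothetical helper names.

```python
import numpy as np


def mix_at_snr(speech: np.ndarray, noise: np.ndarray, snr_db: float) -> np.ndarray:
    """Add noise to speech, scaled so the mix has the requested SNR in decibels."""
    if len(noise) < len(speech):
        # Loop the noise so it covers the whole speech clip.
        repeats = int(np.ceil(len(speech) / len(noise)))
        noise = np.tile(noise, repeats)
    noise = noise[: len(speech)]

    speech_power = np.mean(speech ** 2)
    noise_power = np.mean(noise ** 2)
    # SNR_dB = 10 * log10(P_speech / P_noise), so the required noise power is:
    target_noise_power = speech_power / (10 ** (snr_db / 10))
    scaled_noise = noise * np.sqrt(target_noise_power / noise_power)
    return speech + scaled_noise


def snr_db(speech: np.ndarray, noise: np.ndarray) -> float:
    """Signal-to-noise ratio in dB between a clean signal and the noise added to it."""
    return float(10 * np.log10(np.mean(speech ** 2) / np.mean(noise ** 2)))
```

Lower SNR means louder noise: at 0 dB, the noise carries as much power as the speech.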

AI Transcription Tools – Product & Pricing

1. Otter.ai

- Free plan available

- Pro: $8.33 per month (billed annually)

- Business: $20 per month per user

2. Whisper (OpenAI / self-hosted)

- If you self-host: free, only computer/GPU cost

- If using API: around $0.006 per minute (~$0.36 per hour)

3. Rev

- AI transcription: $0.25 per minute (~$15 per hour)

- Human transcription: $1.70–$1.99 per minute (~$102–$119 per hour)

4. Speechmatics

- Batch transcription: about $0.24 per hour (standard)

- Realtime transcription: about $0.32–$0.56 per hour (depends on accuracy level)

5. Looppanel

- Solo plan: $30 per month (about 10 hours included → ~$3 per hour)

- Team plan: starts at $350 per month (more hours + team features)

👉 At a glance:

- Subscription-based: Otter.ai, Looppanel

- Pay-per-use: Rev, Whisper (API), Speechmatics

- Cheapest per hour: Whisper and Speechmatics; most expensive: Rev's human transcription

AI Transcription Tools – Pricing & Best Use Cases

| Product | Pricing | Best For |
|---|---|---|
| Otter.ai | Free plan; Pro $8.33/month; Business $20/user/month | Students, podcasters, small teams who want easy-to-use transcription with a subscription |
| Whisper | Self-hosted: free (just computer cost); API: ~$0.006/min (~$0.36/hr) | Cheapest bulk transcription; tech-savvy users who can handle setup |
| Rev (AI) | $0.25 per minute (~$15/hour) | Quick, affordable AI transcription with moderate accuracy in noisy audio |
| Rev (Human) | $1.70–$1.99 per minute (~$102–$119/hour) | Highly accurate transcription for legal, medical, or important recordings |
| Speechmatics | $0.24–$0.56 per hour (depending on standard or realtime accuracy level) | Enterprises needing multilingual or realtime transcription |
| Looppanel | $30/month for ~10 hours (~$3/hour); Team plans from $350/month | Researchers needing transcription plus tagging, highlights, and analysis |
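Because some of these tools charge per minute and others charge a flat monthly fee, it helps to convert everything to your actual monthly volume. The rates in this hypothetical helper are copied from the table above and will go out of date, so re-check each vendor's pricing page; the sketch also ignores plan limits such as monthly minute caps.

```python
# Rates copied from the table above (USD); treat them as assumptions, not live prices.
PAY_PER_MINUTE = {
    "Whisper API": 0.006,
    "Rev (AI)": 0.25,
    "Rev (Human)": 1.70,  # low end of the listed $1.70-$1.99 range
}
MONTHLY_PLANS = {
    "Otter.ai Pro (billed annually)": 8.33,
    "Looppanel Solo": 30.00,  # listed as including about 10 hours
}


def pay_per_use_cost(rate_per_minute: float, hours: float) -> float:
    """Cost in USD for `hours` of audio at a per-minute rate."""
    return round(rate_per_minute * 60 * hours, 2)


def effective_hourly_rate(monthly_price: float, hours: float) -> float:
    """What a flat monthly plan costs per hour of audio you actually transcribe."""
    return round(monthly_price / hours, 2)


if __name__ == "__main__":
    hours = 10  # hours of audio transcribed per month
    for name, rate in PAY_PER_MINUTE.items():
        print(f"{name}: ${pay_per_use_cost(rate, hours):.2f} per month")
    for name, price in MONTHLY_PLANS.items():
        print(f"{name}: ${effective_hourly_rate(price, hours):.2f} per hour")
```

At 10 hours a month, for example, the Whisper API comes to about $3.60 while Rev's AI service comes to $150, which is why volume matters as much as the headline rate.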

Error Rate in AI Transcription Tools

The standard way to measure a transcription tool's quality is its error rate, known in the transcription world as Word Error Rate (WER). The lower the WER, the more accurate the transcript.

In quiet conditions, most AI transcription software reaches a WER of around 5%–15%, which corresponds to roughly 85%–95% accuracy. In noisy audio environments, however, the WER can rise sharply, sometimes to 25% or more. At 25%, about a quarter of the words end up wrong, missing, or extra.
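The arithmetic behind those percentages follows from the standard WER formula, where S, D, and I count substituted, deleted, and inserted words and N is the number of words in the reference transcript. The counts below are an illustrative worked example, not measurements from any tool:

```latex
\mathrm{WER} = \frac{S + D + I}{N}, \qquad \text{accuracy} \approx 1 - \mathrm{WER}

\text{Quiet clip } (N = 100,\ S = 6,\ D = 2,\ I = 2):\quad
\mathrm{WER} = \frac{6 + 2 + 2}{100} = 10\% \;\Rightarrow\; \text{accuracy} \approx 90\%

\text{Noisy clip } (N = 100,\ S = 14,\ D = 8,\ I = 3):\quad
\mathrm{WER} = \frac{14 + 8 + 3}{100} = 25\%
```

Because insertions count against the score, WER can even exceed 100% on very poor audio, which is why "accuracy = 1 minus WER" is only an approximation.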

For example, automatic speech recognition accuracy tends to drop when multiple people speak at once. Tools also confuse who said what, a separate kind of mistake that is usually measured as diarization error rather than WER. Similarly, heavy background noise like traffic, clapping, or echoes makes it difficult for AI to identify words correctly.

Compared to this, human transcription accuracy usually stays high even in noisy conditions, because humans can use context to guess muffled words or interpret unclear phrases. While AI may output random text when it’s unsure, a human can still make a logical guess.

The good news is that modern systems trained with machine learning for speech recognition are improving quickly. Tools like Whisper and Speechmatics, for example, are designed to handle more noise than older software. Still, understanding the transcription error rate in noisy audio is key before deciding which tool is right for you.

How to Improve Transcription Accuracy in Noisy Conditions

Even the best AI transcription tools can struggle with noisy audio, but the good news is there are ways to improve results. With the right setup and strategies, you can reduce the transcription error rate and get more accurate transcripts.

1. Use Quality Recording Equipment

A good microphone makes a big difference. Built-in laptop or phone mics often capture too much background noise. Using a dedicated mic with noise-cancellation improves speech recognition accuracy from the start.

2. Reduce Noise Before Transcription

Pre-processing your audio with software like Krisp or Audacity helps. These tools reduce background noise so the speech is clearer before you feed it into an AI tool. Apply it in moderation, though: aggressive noise reduction can distort the voice and introduce errors of its own.
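One classic noise reduction technique is spectral subtraction: estimate the noise spectrum from a stretch of noise-only audio (similar in spirit to the "noise profile" step in Audacity), then subtract it from every short frame of the recording. The NumPy sketch below is a textbook version, not how Krisp or Audacity actually implement their filters; `spectral_subtract`, the 512-sample frame, and the 0.05 floor are hypothetical choices. It also shows why steady noise (fans, hum) is easier to remove than background voices: the method assumes the noise spectrum stays roughly constant over time.

```python
import numpy as np


def _frames(x: np.ndarray, n_fft: int, hop: int) -> np.ndarray:
    """Split a signal into overlapping frames of n_fft samples, hop samples apart."""
    if len(x) < n_fft:
        raise ValueError("signal is shorter than one frame")
    count = 1 + (len(x) - n_fft) // hop
    return np.stack([x[i * hop : i * hop + n_fft] for i in range(count)])


def spectral_subtract(noisy: np.ndarray, noise_clip: np.ndarray,
                      n_fft: int = 512, floor: float = 0.05) -> np.ndarray:
    """Basic magnitude spectral subtraction using a noise-only clip as the noise profile."""
    hop = n_fft // 2
    # Periodic Hann window: at 50% overlap the windows sum to 1, so overlap-add
    # reconstructs the signal when nothing is subtracted.
    window = 0.5 - 0.5 * np.cos(2 * np.pi * np.arange(n_fft) / n_fft)

    # Noise profile: average magnitude spectrum of the noise-only clip.
    noise_spec = np.fft.rfft(_frames(noise_clip, n_fft, hop) * window, axis=1)
    noise_mag = np.abs(noise_spec).mean(axis=0)

    spec = np.fft.rfft(_frames(noisy, n_fft, hop) * window, axis=1)
    mag, phase = np.abs(spec), np.angle(spec)
    # Subtract the noise estimate, keeping a small fraction of the original
    # magnitude as a floor to limit "musical noise" artifacts.
    cleaned_mag = np.maximum(mag - noise_mag, floor * mag)
    cleaned = np.fft.irfft(cleaned_mag * np.exp(1j * phase), n=n_fft, axis=1)

    # Overlap-add the frames back into one signal. The first and last half-frame
    # are covered by only one window (so they come out attenuated), and any
    # samples after the last full frame are left at zero.
    out = np.zeros(len(noisy))
    for i, frame in enumerate(cleaned):
        out[i * hop : i * hop + n_fft] += frame
    return out
```

Raising `floor` leaves more residual noise but fewer warbling artifacts; lowering it does the opposite, which is the same trade-off behind the advice to apply noise reduction in moderation.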

3. Choose Tools with Machine Learning for Speech Recognition

Modern platforms like Whisper, Otter, and Speechmatics use advanced models trained on diverse datasets, so they handle audio recorded in noisy environments better than older systems.

4. Enable Speaker Identification

When transcribing multiple speakers, choose a tool with speaker labeling. This reduces confusion about who said what and makes the transcript more readable.

5. Record in Better Environments

If possible, minimize background noise—close doors, avoid crowded spaces, or record in a quiet room. Small steps can greatly improve automatic speech recognition accuracy.

Use Cases of AI for Call Center Recordings & Business Meetings

AI transcription is not just for podcasts or students—it’s becoming a game changer for businesses too. In industries where conversations are constant, like customer support or team collaboration, AI transcription tools bring real value.

1. Call Centers

Companies handle thousands of calls every day. With AI for call center recordings, managers can automatically generate transcripts of conversations. This helps in quality monitoring, training new agents, and analyzing customer feedback. Instead of listening to long recordings, supervisors can quickly search transcripts for keywords. Even with noisy audio, tools using machine learning for speech recognition can capture the main ideas accurately.
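Once calls are transcribed, keyword search is straightforward string work. This sketch assumes the transcription tool can export timestamped, speaker-labeled segments; the `Segment` structure and `find_keywords` function are hypothetical, so you would map your tool's actual export format onto them.

```python
import re
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Segment:
    """One timestamped, speaker-labeled chunk of a transcript (hypothetical format)."""
    start: float  # seconds from the start of the recording
    speaker: str
    text: str


def find_keywords(segments: Iterable[Segment], keywords: Iterable[str]) -> List[Segment]:
    """Return the segments that mention any keyword (case-insensitive, whole words only)."""
    patterns = [re.compile(rf"\b{re.escape(k)}\b", re.IGNORECASE) for k in keywords]
    return [seg for seg in segments if any(p.search(seg.text) for p in patterns)]


if __name__ == "__main__":
    call = [
        Segment(12.0, "Customer", "I want to cancel my subscription."),
        Segment(20.5, "Agent", "I can help with that."),
        Segment(41.0, "Customer", "Also, my refund never arrived."),
    ]
    for seg in find_keywords(call, ["cancel", "refund"]):
        print(f"[{seg.start:>6.1f}s] {seg.speaker}: {seg.text}")
```

The timestamps let a supervisor jump straight to the relevant moment in the recording. Keep in mind that noisy audio can garble the keyword itself, so an exact whole-word match will miss misheard variants.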

2. Business Meetings

During team meetings, especially virtual ones, people often talk over each other. Voice-to-text tools for multiple speakers with speaker identification make it easy to know who said what. Tools like Otter.ai integrate directly with Zoom or Microsoft Teams, providing real-time transcription that improves productivity.

3. Accessibility & Compliance

In both call centers and meetings, transcription makes content more accessible. AI captioning tools with noise handling help employees who are hard of hearing. For regulated industries (like finance or healthcare), keeping accurate speech-to-text records ensures compliance and reduces risks.
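Many transcription tools can export captions directly. If yours only provides timestamped text, converting it to the SubRip (.srt) format that most video players accept takes only a few lines. This sketch assumes you already have `(start_sec, end_sec, text)` cues from the tool; the function names are hypothetical.

```python
from typing import List, Tuple


def format_srt_time(seconds: float) -> str:
    """Format seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(cues: List[Tuple[float, float, str]]) -> str:
    """Build SRT captions: numbered cues separated by blank lines."""
    blocks = []
    for index, (start, end, text) in enumerate(cues, start=1):
        blocks.append(f"{index}\n{format_srt_time(start)} --> {format_srt_time(end)}\n{text}\n")
    return "\n".join(blocks)
```

For instance, a cue starting at 3723.5 seconds gets the timestamp `01:02:03,500`. Saving the output as a `.srt` file next to the video is usually enough for a player to display it.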

Final Verdict – Are AI Transcription Tools Reliable for Noisy Audio?

So, the big question: Can you really trust AI transcription tools when dealing with noisy audio? The answer is both yes and no.

In ideal conditions—quiet rooms, clear speakers, and good equipment—AI transcription accuracy can be very high, often close to human-level performance. Tools like Whisper, Otter, and Speechmatics have made huge improvements with machine learning for speech recognition, reducing mistakes and handling different accents better than older systems.

However, when you introduce noisy audio, overlapping voices, or poor recording quality, the transcription error rate increases. Even advanced systems with built-in noise reduction may mishear words or mix up speakers. This is where the gap between human and AI transcription accuracy is still clear: humans can use context and meaning to guess muffled words, while AI sometimes produces random text.

That said, AI tools are becoming more reliable every year. For everyday tasks like note-taking, podcasts, interviews, or AI for call center recordings, they save time and money. For mission-critical work—like legal, medical, or compliance transcripts—human transcription is still the safer choice.

👉 Final tip: If you combine smart recording habits, noise reduction software, and the right AI tool, you can get surprisingly accurate results even in noisy conditions. The key is to match the tool with your needs—AI for speed and cost, humans for guaranteed accuracy.

FAQs on AI Transcription Accuracy in Noisy Audio

1. Can AI transcribe noisy audio accurately?

Yes, but with limitations. AI transcription tools can handle light background noise fairly well, especially advanced ones like Whisper or Speechmatics. However, when there’s heavy chatter, traffic, or overlapping voices, the transcription error rate in noisy audio often rises.

2. Which AI transcription tool works best for noisy environments?

Tools like Whisper (trained on diverse datasets) and Otter.ai (with real-time transcription and speaker identification) perform better than older software. Adding noise reduction through apps like Krisp can also improve results.

3. Are AI transcription tools better than humans in noisy conditions?

No. While automatic speech recognition accuracy is improving, AI still trails human transcribers on noisy audio. Humans can use context to guess unclear words, while AI may produce random or incorrect text.

4. How can I improve transcription accuracy for noisy audio?

Use a good microphone, record in quiet spaces if possible, and pre-process audio with noise reduction software. Choosing tools with speaker identification and machine learning for speech recognition also boosts accuracy.

5. Are there affordable transcription options for students and small creators?

Yes. Otter.ai offers a free plan and low-cost subscriptions, while the Whisper API costs only about $0.36 per hour. Both are affordable options for students and solo podcasters.